Tag: database

Identifying the Extraneous Publishing AntiPattern

Posted by bsstahl on 2022-08-08 and Filed Under: development

What do you do when a dependency of one of your components needs data, ostensibly from your component, that your component doesn't actually need itself?

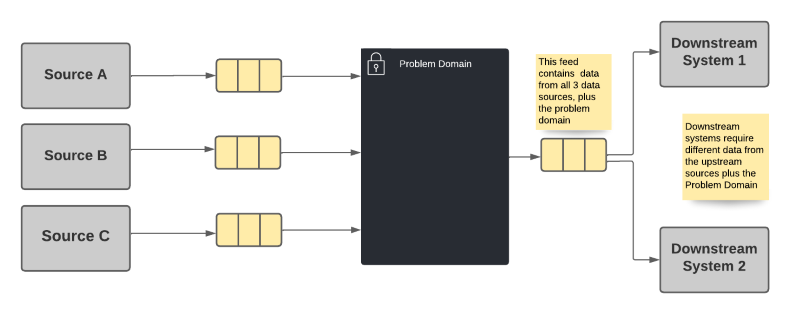

Let's think about an example. Suppose our problem domain (the big black box in the drawings below) uses some data from 3 different data sources (labeled Source A, B & C in the drawings). There is also a downstream dependency that needs data from the problem domain, as well as from sources B & C. Some of the data required by the downstream dependency are not needed by, or owned by, the problem domain.

There are two common implementations, discussed first, and a third, slightly less obvious one discussed later in this article. We could:

- Pass through the needed values on the output from our problem domain. This is the default option in many environments.

- Force the downstream to take additional dependencies on sources B & C.

Note: In the worst of these cases, the data from one or more of these sources is not needed at all in the problem domain.

Option 1 - Increase Stamp Coupling

The most common choice is for the problem domain to publish all data for which it is the system of record, and also to pass through the data that downstream dependencies need from the other sources. Since we know that a dependency needs the data, we simply provide it as part of the output of the problem domain system.

Option 1 Advantages

- The downstream systems only need to take a dependency on a single data source.

Option 1 Disadvantages

- Violates the Single Responsibility Principle because the problem domain may need to change for reasons the system doesn't care about. This can occur if an upstream producer adds or changes data, or a downstream consumer needs additional or changed data.

- The problem domain becomes the de-facto system of record for data it doesn't own. This may cause downstream consumers to be blocked by changes important to the consumers but not the problem domain. It also means that the provenance of the data is obscured from the consumer.

- Problems incurred by upstream data sources are exposed in the problem domain rather than in the dependent systems, irrespective of where the problem occurs or whether that problem actually impacts the problem domain. That is, the owners of the system in the problem domain become the "one neck to wring" for problems with the data, regardless of whether the problem is theirs, or they even care about that data.

I refer to this option as an implementation of the Extraneous Publishing AntiPattern (thanks to John Nusz for the naming suggestion). When this antipattern is used, it will eventually cause significant problems for both the problem domain and its consumers as they evolve independently and the needs of each system change. The problem domain will be stuck with both its own requirements and the requirements of its downstream dependencies. The dependent systems, meanwhile, will be stuck waiting for changes in the upstream data provider. These changes will have no priority in that system because they are not needed in that domain and are not cared about by that product's ownership.

The relationship between two components created by a shared data contract is known as stamp coupling. Like any form of coupling, we should attempt to minimize it as much as possible between components so that we don't create hard dependencies that reduce our agility.
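To make the coupling concrete, here is a minimal Python sketch of the two output contracts. Every type and field name in it (`OwnedOutput`, `PassThroughOutput`, `source_b_rating`, `source_c_region`) is a hypothetical illustration rather than part of any real system. The helper simply lists the fields a contract publishes that the problem domain does not own. Each of those is a field whose upstream changes would force a change to the problem domain's contract.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class OwnedOutput:
    """Only data the problem domain is system of record for (hypothetical fields)."""
    entity_id: str
    status: str


@dataclass(frozen=True)
class PassThroughOutput:
    """Option 1 contract: owned data plus values copied from sources B and C."""
    entity_id: str
    status: str
    source_b_rating: int   # owned by Source B, not needed by the problem domain
    source_c_region: str   # owned by Source C, not needed by the problem domain


def extraneous_fields(published: type, owned: type) -> set[str]:
    """Return the published field names that the domain does not own."""
    return {f.name for f in fields(published)} - {f.name for f in fields(owned)}


print(sorted(extraneous_fields(PassThroughOutput, OwnedOutput)))
# prints ['source_b_rating', 'source_c_region']
```

The larger this set grows, the more reasons the problem domain has to change that have nothing to do with its own requirements.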

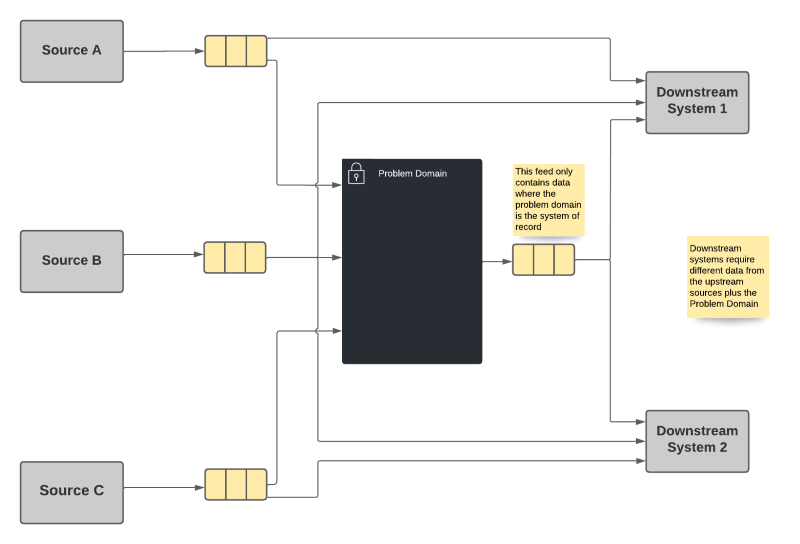

Option 2 - Multiplicative Dependencies

This option requires each downstream system to take a dependency on every system of record whose data it needs, regardless of what upstream data systems may already be utilizing that data source.

Option 2 Advantages

- Each system publishes only that information for which it is system of record, along with any necessary identifiers.

- Each dependency gets its data directly from the system of record without concern for intermediate actors.

Option 2 Disadvantages

- A combinatorial explosion of dependencies is possible since each system has to take dependencies on every system it needs data from. In some cases, this means that the primary systems will have a huge number of dependencies.

While there is nothing inherently wrong with having a large number of repeated dependencies within the broader system, it can still cause difficulties in managing the various products when the dependency graph starts to get unwieldy. We've seen similar problems in package-management and other dependency models before. However, there is a more common problem when we prematurely optimize our systems. If we optimize prematurely, we can create artifacts that we need to support forever, that create unnecessary complexity. As a result, I tend to use option 2 until the number of dependencies starts to grow. At that point, when the dependency graph starts to get out of control, we should look for another alternative.

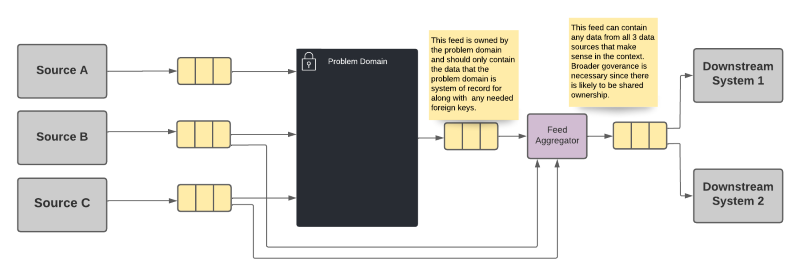

Option 3 - Shared Aggregation Feed

Fortunately, there is a third option that may not be immediately apparent. We can get the best of both worlds, and limit the impact of the disadvantages described above, by moving the aggregation of the data to a separate system. In fact, depending on the technologies used, it may be possible to perform this aggregation with an infrastructure component, a low-code solution that is less likely to have reliability concerns.

In this option, each system publishes only the data for which it is system of record, as in option 2 above. However, instead of every system having to take a direct dependency on all of the upstream systems, a separate component is used to create a shared feed that represents the aggregation of the data from all of the sources.
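A rough way to see the difference is to count dependency edges. The sketch below is a simplified model, not a measurement: it assumes each consumer lists the sources it needs, and that a shared feed adds one edge per distinct source into the aggregator plus one edge per consumer out of it. The function and variable names are hypothetical.

```python
def direct_edges(needs: dict[str, set[str]]) -> int:
    """Direct model: every consumer depends on each source it needs."""
    return sum(len(sources) for sources in needs.values())


def aggregated_edges(needs: dict[str, set[str]]) -> int:
    """Shared-feed model: each distinct source feeds one aggregator,
    and each consumer depends only on that aggregator."""
    distinct_sources = set().union(*needs.values())
    return len(distinct_sources) + len(needs)


# Five hypothetical consumers that each need the same four sources.
sources = {"A", "B", "C", "D"}
needs = {f"consumer_{i}": set(sources) for i in range(5)}

print(direct_edges(needs))      # prints 20
print(aggregated_edges(needs))  # prints 9
```

In this model the direct approach grows with the product of consumers and sources, while the shared feed grows with their sum. For a small graph the two counts are close, which fits the advice to stay with direct dependencies until the graph becomes unwieldy.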

Option 3 Advantages

- Each system publishes only that information for which it is system of record, along with any necessary identifiers.

- The downstream systems only need to take a dependency on a single data source.

- A shared ownership can be arranged for the aggregation source that does not put the burden entirely on a single domain team.

Option 3 Disadvantages

- The aggregation becomes the de-facto system of record for data it doesn't own, though that fact is anticipated and hopefully planned for. The ownership of this aggregation needs to be well-defined, potentially even shared among the teams that provide data for the aggregation. This still means, though, that the provenance of the data is obscured from the consumer.

- Problems incurred by upstream data sources are exposed in the aggregator rather than in the dependent systems, irrespective of where the problem occurs. That is, the owners of the aggregation system become the "one neck to wring" for problems with the data. However, as described above, that ownership can be shared among the teams that own the data sources.

It should be noted that in any case, regardless of implementation, a mechanism for correlating data across the feeds will be required. That is, the entity being described will need either a common identifier, or a way to translate the identifiers from one system to the others so that the system can match the data for the same entities appropriately.
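The correlation step can be sketched as follows. This is a minimal in-memory illustration under stated assumptions, not a production design: the translation table `ID_MAP`, the record shape `(source, local_id, payload)`, and all identifiers and field values are hypothetical. Keeping each payload under its source name is one possible way to keep provenance visible in the aggregated view.

```python
from collections import defaultdict

# Hypothetical translation table: (source name, source-local id) -> shared entity id.
ID_MAP = {
    ("A", "a-17"): "entity-1",
    ("B", "9001"): "entity-1",
    ("C", "X42"): "entity-1",
    ("B", "9002"): "entity-2",
}


def aggregate(records):
    """Merge per-source records into one view per shared entity id.

    Each record is (source, local_id, payload). Payloads are stored under the
    source name so the origin of every value stays visible. Records whose
    identifier cannot be translated are returned separately.
    """
    merged = defaultdict(dict)
    unmatched = []
    for source, local_id, payload in records:
        entity_id = ID_MAP.get((source, local_id))
        if entity_id is None:
            unmatched.append((source, local_id))
            continue
        merged[entity_id][source] = payload
    return dict(merged), unmatched


feed, unmatched = aggregate([
    ("A", "a-17", {"status": "active"}),
    ("B", "9001", {"rating": 4}),
    ("C", "X42", {"region": "west"}),
    ("B", "9002", {"rating": 2}),
    ("C", "X99", {"region": "east"}),  # no translation available
])
```

Here `feed["entity-1"]` holds the values from all three sources, `feed["entity-2"]` holds only the value from Source B, and the untranslatable record ends up in `unmatched` instead of being silently dropped or mismatched.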

You'll notice that the aggregation system described in this option suffers from some of the same disadvantages as the other two options. The biggest difference, however, is that the sole purpose of this tool is to provide this aggregation. As a result, we handle all of these drawbacks in a domain that is built entirely for this purpose. Our business services remain focused on our business problems, and we create a special domain for the purpose of this data aggregation, with development processes that serve that purpose. In other words, we avoid expanding the definition of our problem domain to include the data aggregation as well. By maintaining each component's single responsibility in this way, we have the best chance of remaining agile, and of not losing velocity due to extraneous concerns like unnecessary data dependencies.

Implementation

There are a number of ways we can perform the aggregation described in option 3. Certain databases, such as MongoDB and Cosmos DB, provide mechanisms that can be used to aggregate multiple data elements. There are also streaming data implementations that include tools for joining multiple streams, such as Apache Kafka's KSQL. In future articles, I will explore some of these methods for minimizing stamp coupling and avoiding the Extraneous Publishing AntiPattern.

Tags: agile antipattern apache-kafka coding-practices coupling data-structures database development ksql microservices

Unit Testing the Data Tier

Posted by bsstahl on 2007-08-23 and Filed Under: development

Recently, both Jeffrey Palermo and Scott Bellware have written posts on codebetter.com positing that testing the database should be avoided when doing unit testing. Specifically, Palermo points out that

Unit testing, by common definition, excludes external dependencies. It's not a unit test if we reach out and touch things.

While this may be a nice ideal, in reality it simply does not turn out to be the best way to do things in the majority of situations. Of course, to be fair, every situation is different and I'm sure there are many circumstances where it is better to pull out database testing into integration tests or similar. In fact, there are perfectly valid reasons why we might not want to call it a unit-test if we test the database. However, for the majority of software projects (i.e. the 80% use-case), I believe it is not only legitimate, but best-practice, to test the database with our unit tests.

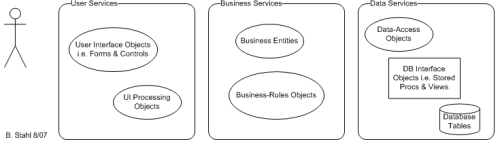

To understand the primary reasoning here, we need to go back to the fundamentals and look at our logical view of this 80% use-case software system.

The drawing illustrates many of the systems we create today in .NET. Of course, not all systems will fit into this model, but it certainly represents the classic 3-tier architecture used by so many of our projects. As this drawing shows, our data-access objects, which are often just thin wrappers over stored procedures, perhaps containing some ORM logic, are really part of the data-services layer. Even though these objects may physically reside alongside the business-layer objects, they really belong to the data-services. Thus, the assertion that we cross boundaries by testing the database when we test the data-services "unit" is not, in my mind, a valid concern. Yes, the tests will make out-of-process calls in getting to the database, and we will suffer a performance penalty as a result, but the fact remains that the database is not a dependency of the data-access objects, it is an integral part of that layer. Thus, in order to test that layer, we must test the database.

From a practical standpoint, this view of the application works out best in terms of testing it since the data-access objects are generally so thin. What is the point of testing those objects in isolation since they are basically wrappers most of the time? It is sometimes appropriate to write some tests for these objects on their own if they contain ORM-type logic that can be tested as a unit, but for the most part, when we test the data-layer, what we want to test is that the proper data is returned. Validating that the proper data is returned from the data-tier requires testing the entire layer, including the database. Another factor here is that the database itself cannot generally be tested without some form of .NET object interface. As an example, if we were to try to write a unit test for a stored procedure in NUnit or VS Team System, we would need to have the same data-access code in our test as we have in our data-tier object. Thus, it only makes sense to test those items as one unit. Perhaps down the road, since SQL Server now has its own CLR, we may be able to do more testing of the database objects at that level and not need data-access objects. When that occurs, we should definitely revisit our best-practices. But for now it is clear that the best way to test data-access is to test the data-services layer as a unit.

Please do not think that I am in any way suggesting we need to test the database in the same tests that are used for the business-layer objects. That would be a clear violation of the separation of concerns discussed by both Palermo and Bellware, as well as many others, and would best be described as an integration test because it crosses the boundaries in the diagram above. We should definitely isolate the layers of our applications from each other in order to accurately test them. What I am saying is that the isolation of the data-tier should occur between the business-tier and the data-tier on the diagram. Thus, testing of the data-access objects includes testing of the database objects. The question then really becomes: how do we unit-test the data-services layer so that the tests execute as quickly as possible and do not depend on existing data (so that changes to the data do not cause tests to fail), yet still accurately and completely test that unit? I am attempting to put together a list of best-practices in this area and will make that the subject of a future post.
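As one possible illustration of those goals (and not the list of best-practices promised above), here is a minimal sketch in Python that uses an in-memory SQLite database as a stand-in for a .NET data-access object over SQL Server. The table, the data, and the function names are all hypothetical. The test exercises the thin data-access function and the database together as one unit, and it creates its own data so that it never depends on rows that already exist.

```python
import sqlite3


def get_active_customer_names(conn):
    """Thin data-access function (hypothetical), analogous to a wrapper
    over a stored procedure."""
    rows = conn.execute(
        "SELECT name FROM customers WHERE is_active = 1 ORDER BY name"
    ).fetchall()
    return [row[0] for row in rows]


def make_test_database():
    """Build a fresh in-memory database containing only known test data."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (name TEXT NOT NULL, is_active INTEGER NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO customers (name, is_active) VALUES (?, ?)",
        [("Zoe", 1), ("Adam", 1), ("Inactive Ivan", 0)],
    )
    return conn


def test_returns_only_active_customers_sorted_by_name():
    conn = make_test_database()
    try:
        # The query and the data-access code are verified together.
        assert get_active_customer_names(conn) == ["Adam", "Zoe"]
    finally:
        conn.close()


test_returns_only_active_customers_sorted_by_name()
```

An in-memory database keeps the test fast and isolated, but it is only an approximation. Against a real database server, the same idea would more likely mean creating the test data inside a transaction and rolling it back afterwards.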