Tag: azure

Committing to Git from an Azure DevOps Pipeline

Posted by bsstahl on 2020-06-17 and Filed Under: tools

There are occasions, such as when working with static website generators, that you'll want to push some changes made in an Azure DevOps pipeline, back into the source Git repository. This process is simple enough, but since I have struggled to get it configured twice now, I am documenting the process here for your use, and my future use.

Azure DevOps pipelines typically contain two parts, although other configurations are possible. The two standards are:

- Get sources - gets the information to work with from a Git repository or other source control environment

- Agent Job - holds the tasks required to complete the pipeline

You'll need to take the following steps to configure the interactions between your source control provider and your pipeline:

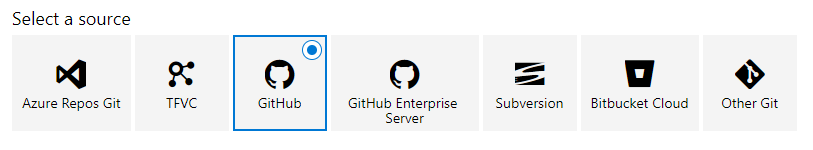

- Configure the Get sources section of the pipeline by selecting your source control provider from the list of options and then choosing the repository from the list within that provider. For most providers, you will need to supply credentials with access to the repository, although the pipeline may already have the basic access it needs to read from an Azure DevOps Repo.

- [optional] Configure an Agent Job to perform any cleanup of the repo necessary. When building a static website, I first delete all files from the target directory (the old static website files) so that only the files that are still needed are included in the final deployment.

Note: for all Agent Job steps that involve scripting, I use the Command Line task which allows me to execute my scripts in one of the native OS shells (Bash on Linux and macOS and cmd.exe on Windows). You could just as easily use the Powershell task, which is cross-platform or any number of other options.

Execute your build process. This is the step that generates the new files that will eventually be committed back into the source repository. Each static website generator has their own method for creating the site, see the documentation for your tooling for specifics. You can also execute custom tools or scripts here that modify files in the repository any way you'd like.

Execute the commit back to the source repo. This is the money step, where everything that has been done to this point is saved in the repository. As with previous steps, I use the Command Line task to execute the needed commands. My script is shown below. It is written for the Windows cmd.exe shell so commands that start with ECHO are log entries that will be included in the pipeline's execution log to help with troubleshooting and maintenance. This script uses a number of pipeline variables which take the form $(variableName) to make configuration easier. The git.email and git.user variables were defined by me in the Variables section of the pipeline, you will need to either configure those variables yourself, or substitute their values in the script. The Build.SourceVersion and Build.SourceVersionMessage variables are supplied by the pipeline and no action was required on my part to create or enable them.

An interesting thing to note about this script is the git push command. The full command git push origin HEAD:master is required in this case, rather than just a simple git push because, once the files are downloaded into the pipeline repo, the local repository is disconnected from the remote by the pipeline, possibly as a safety measure. We have to tell the local repo to push back to the remote HEAD, or else the push will fail. I suspect there is a way to tell the pipeline not to disconnect the head, but doing things this way, to my knowledge, has no ill-effects and is simple enough that it isn't really worth the effort for me to find out.

ECHO ** Starting "Git config for user: $(git.user)"

git config --global user.email "$(git.email)"

git config --global user.name "$(git.user)"

ECHO ** Starting "Git add..."

git add .

ECHO ** Starting "Git commit..."

git commit -m "Static site rebuild due to commit $(Build.SourceVersion) '$(Build.SourceVersionMessage)'"

ECHO ** Starting "Git push..."

git push origin HEAD:master

ECHO ** Ending Update remote git repo script

Finally, we need to give the pipeline user permission to write to the repository. This is where things can get a bit tricky and hard to find. The key configuration area within Azure DevOps can be found by going to the Project Settings.

For Azure DevOps repositories, select the Repositories tab and click on the [Projectname] Build Service User in the Users section. This will show a list of permissions that the build service user has. There are 4 that are of interest here: Contribute, Create branch, Create tag and Read. Some of those will probably already be enabled for you. I suspect only the Contribute needs to be added at this time, but I usually make sure all 4 are allowed.

For other repositories, things are even more complicated. Accounts need to be configured on the external source control service so that the Build Service User account has the permissions it needs to push to that repository. This may require an SSH configuration, an Auth token, or any number of other mechanisms depending on the source control provider. See that provider's documentation for the specifics.

Update 2022-07-13: There is an additional option that needs to be enabled in the pipeline's Agent Job.

☑ Allow scripts to access the OAuth tokenThis option must be checked so that the script can acquire the permissions to update the repository.

There are other ways to do all of this of course. One idea that intrigues me that I haven't tried yet is to have the build service submit a pull-request to the remote git repo. This would require an additional approval step before the changes are merged into the repo. For static websites where merging into master is the equivalent of publishing the site, this might give me the opportunity to review the built site before it is actually deployed.

Have you tried this pull-request method, or used this kind of technique with an non-Azure DevOps repo? If so, please let me know about it @bsstahl.

Tags: ci_cd git azure devops azure devops

PDC 2008 - Day 2

Posted by bsstahl on 2008-11-01 and Filed Under: event development

Day 2 was a more focused day for me at PDC 2008. After attending the morning keynotes, which included the first peeks at Windows 7 features as well as a terrific (as always) code-only presentation on programming against the cloud by Don Box and Chris Anderson, I headed over to the hands-on-labs where I spent the rest of the day working with Azure and creating applications that run in the cloud. I also received my Azure key and began the process of setting up a virtual machine to house the Azure tools.

Real-Time Updates on Social Media

Just a reminder that much of what is happening here at the PDC is being posted in real-time (or close to it) on social media. My updates can now be found @bsstahl.

Keynotes

Day 2 keynotes focused on the client side of Windows development. Not surprisingly, this included Windows 7 and WPF development improvements as well as Silverlight and ASP.NET development. Some things that caught my attention in the keynotes included features of Windows 7 like its ability to "live" on a domain, but still participate in a "Home Group" when your work laptop is brought home. Multi-monitor support also looks to be vastly improved including the ability to work multi-monitor in a remote desktop session. Scott Guthrie also introduced a number of new controls and tools for developing applications in WPF and Silverlight including a Ribbon control that appears intended to make your WPF apps look like Windows Forms apps.

The 2nd Keynote of the day was Don Box and Chris Anderson's fantastic presentation on developing applications that bring cloud computing into the enterprise. Clearly the most engaging of all of the Microsoft speakers, this duo put together, over the course of the 1.5 hour session, a series of services that ran both in the cloud and within the firewall, and linked the two securely, but in real-time. You would not be wasting your time if you were to view the video of this keynote online.

Hands-On Labs

The remainder of my day, after lunch, was spent in the hands-on labs working through the prescriptive samples provided by Microsoft for their Azure product. I was able to complete the first two of these labs which detailed the process of creating websites and services in the cloud that used local-storage and queues to perform a number of relatively simple tasks. These labs clearly answered my question from yesterday morning, with the answer that I expected. That is, an Azure "Web Role" is a web page or SOAP service that runs in the cloud. As such, everything (that I can think of) that I might need to run on my own servers, can be outsourced into the cloud, to provide the availability of virtually unlimited scale with amazing reliability. The still-unanswered question here is price, but since the CTP is free, I will continue to move in this direction until I find a reason to change course.

Day 3 Preview

Day 3 looks to be futures day, with the keynote focusing on Microsoft Research properties and technologies. Watch social media for all the action as it occurs.

Tags: pdc azure cloud social-media windows

PDC 2008 Day 1

Posted by bsstahl on 2008-10-29 and Filed Under: event

Windows Azure

As you've probably already heard, the big announcement coming out of PDC 2008 Day 1 was "Windows Azure", Microsoft's Cloud Computing Operating System. This is a very interesting story since it has implications, in theory at least, for developers working in any size organization, who need to provide public services that could potentially scale globally or massively. I won't spend time on the specifics right now since there are many who are more knowledgeable than me who have already written about it. I do however, have a few open questions on the topic, which I hope to have answered either in sessions today, or in the hands-on-labs. These include the pricing model (i.e. whether it will really be affordable for the "garage developer"), as well as what actually constitutes an Azure "web role". If, as I suspect, a web role can be a SOAP service or an ASP.NET web page, then the model makes a lot of sense to me and I will definitely be spending some time becoming familiar with the features and capabilities of this tool. I have sketched-out a simple application model that I hope to implement, either in the hands-on-labs or in the online community preview, sometime today. Since I have not yet been granted access to the public CTP, I suspect this will have to occur in the hands-on-labs.

The Future of .NET Development

The other major topic of the sessions I attended during day 1 was the future of development on the .NET platform. Specifics here included details of Visual Studio 2010 as well as a fantastic language futures talk given by Anders Hejilsberg, the father of C#. According to Anders, "The major theme of C# 4.0 is Dynamic Programming" which will allow C# applications to interact with dynamic languages such as JavaScript and Ruby, as well as providing dynamic typing features within C# itself. While, in most cases, I wouldn't (and I think Anders wouldn't) recommend using dynamic typing mechanisms, there are times where it is the most appropriate way (sometimes the only way) of performing the task at hand.

Another feature of future versions of C# include the concept of the compiler as a service. That is, the C# compiler, sometime down the road, is expected to be made available within the application model, useable by applications. We have had other methods of dynamically generating code in the past, but no model nearly as compelling as utilizing the same compiler Visual Studio uses, as a component of the .NET framework.

Sessions Available Online

We are being told that videos of every session will be available online via http://www.microsoft.com/pdc 24-hours after the session. From day 1, I definitely recommend checking out the keynote as an interesting, although far from complete, overview of Azure. I also recommend Anders' talk on C#. There was one session that looked interesting that I couldn't get into called "C# IDE Tips and Tricks" that seemed interesting which I will be checking-out online within the next week or so.

Day 2 begins...now.